Everyone Has Access. Few Have Fluency.

Giving your team access to AI is like handing them a dictionary and expecting them to write poetry.

The most dangerous assumption in AI adoption is that availability equals capability. Walk into any organization that's "implemented AI" and you'll find the same pattern: expensive tools sitting unused, confused teams avoiding the technology, and executives wondering why their AI investment isn't delivering results.

The corporate delusion is epidemic. "We bought ChatGPT licenses for everyone, so we're AI-ready." It's like saying you're ready to perform surgery because you bought a scalpel.

The future belongs to organizations that develop AI fluency, not just AI access.

The Access Illusion

Here's what actually happens when you give teams AI access without fluency: tools often sit unused or underused within weeks of deployment. Teams know AI exists but don't know how to make it work for them. The competence cliff is steep: they fall from enthusiasm to avoidance quickly.

The shadow usage problem is worse. Employees use AI tools poorly, creating more problems than solutions. They generate content that sounds sophisticated but lacks substance. They make decisions based on AI outputs they don't understand. They build dependencies on systems they can't evaluate.

The training fallacy compounds the problem. One-time workshops don't create lasting capability. Training built around features and specific platforms teaches what buttons to push instead of when to push them, and it skips the underlying principles.

The result: teams that are technically "AI-enabled" but practically AI-helpless.

What Fluency Actually Looks Like

Fluency is the ability to think with AI, not just around it. It's the difference between using AI tools and being enhanced by them.

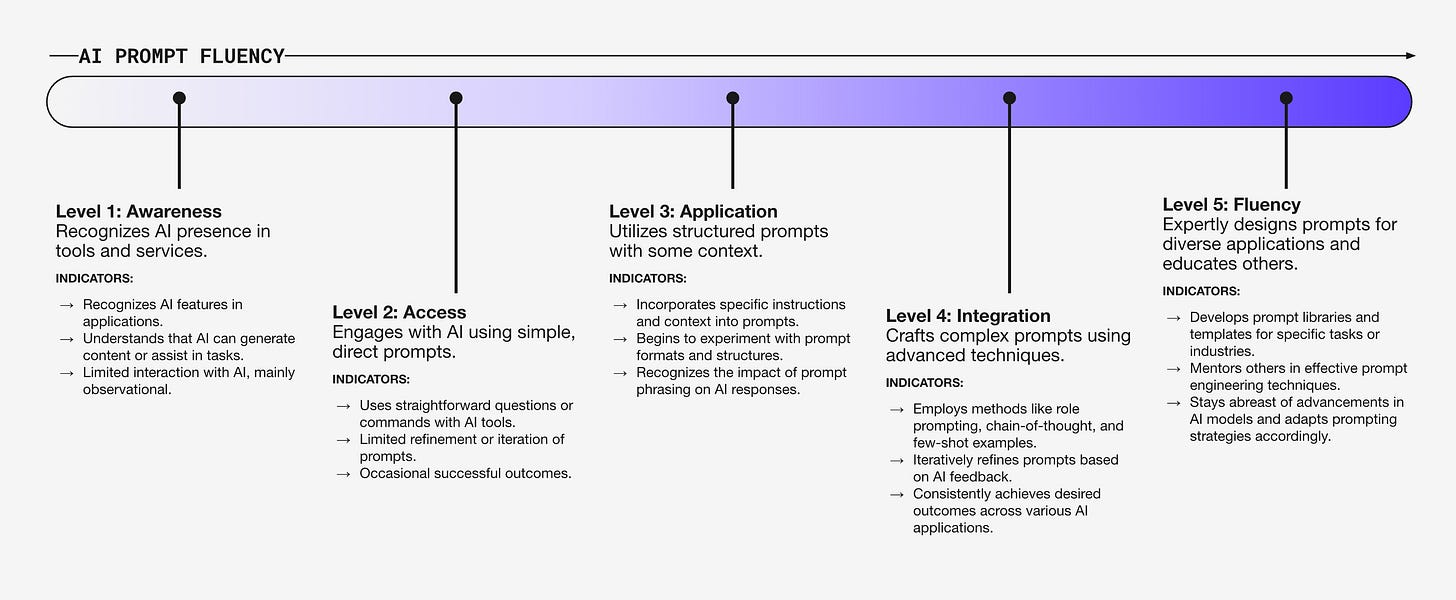

The Fluency Spectrum:

Level 1 - Awareness: "I know AI exists"

Level 2 - Access: "I can use AI tools"

Level 3 - Application: "I can apply AI to specific problems"

Level 4 - Integration: "I can weave AI into my workflow"

Level 5 - Fluency: "I can think strategically about when and how to use AI"

Most organizations get stuck at Level 2. They have access, but they don't have insight. They can generate AI outputs, but they can't evaluate their quality. They can accept what AI produces, but they can't adapt it to the task at hand.

Fluent users don't just use AI tools. They think differently because AI tools exist.

Fluency indicators include:

Prompt sophistication: Understanding how phrasing shapes AI responses

Context awareness: Knowing when AI helps and when it doesn't

Output evaluation: Distinguishing between good and poor AI results

Strategic application: Using AI to enhance thinking, not replace it

The gap between access and fluency is the gap between having a tool and knowing how to use it well.

Why Most AI Training Fails

Organizations treat AI fluency like software training when it's actually literacy development. The difference is profound.

Software training teaches you to use a specific tool. Literacy development teaches you to think in a new way. Software training has a clear endpoint. Literacy development is ongoing.

The common mistakes are predictable:

Feature-focused training: Teaching what buttons to push instead of when to push them

One-size-fits-all approach: Identical training for different roles and skill levels

Tool-centric curriculum: Focusing on specific platforms instead of underlying principles

Event-based learning: Single workshops instead of sustained development

AI fluency develops through practice, not presentations. Through iteration, not instruction.

The Fluency Development Framework

Build fluency systematically, not accidentally.

Stage 1: Foundation Building

Mental model development: How AI works conceptually

Workflow mapping: Where AI fits in existing processes

Use case identification: Specific problems AI could solve

Quality recognition: What good AI output looks like

Stage 2: Skill Building

Prompt engineering: Crafting effective AI interactions

Context management: Providing AI with relevant information

Output refinement: Improving AI results through iteration

Integration patterns: Incorporating AI into daily work

Stage 3: Strategic Thinking

Opportunity recognition: Identifying new AI applications

Risk assessment: Understanding AI limitations and pitfalls

Value evaluation: Measuring AI's impact on work quality

Continuous improvement: Evolving AI usage over time

Fluency isn't a destination. It's a development process that compounds over time.

The Organizational Fluency Challenge

Individual fluency doesn't automatically create organizational fluency. The scale problem is real: some teams become fluent while others lag behind. Knowledge silos develop when fluent individuals don't share learnings systematically.

Cultural resistance emerges when non-fluent team members feel threatened or excluded. Quality variance becomes obvious when some teams produce excellent AI-enhanced work while others produce garbage.

The systematic solution requires:

Community of practice: Regular forums for sharing AI learnings

Mentorship programs: Fluent users help develop others

Quality standards: Consistent criteria for AI output evaluation

Usage guidelines: Clear frameworks for when and how to use AI

Progress tracking: Systematic measurement of fluency development

Fluency spreads through culture, not policy. Through practice, not pronouncement.

The Competitive Advantage of Fluency

While others focus on AI access, fluent organizations focus on AI application. The fluency edge shows up in three ways: fluent teams make better decisions faster, they use AI to enhance rather than replace human insight, and they integrate new AI tools quickly.

The compound effect is powerful:

Year 1: Fluent teams work more efficiently

Year 2: Fluent organizations develop better strategies

Year 3: Fluent companies create new competitive advantages

AI fluency isn't a nice-to-have. It's the new digital literacy.

The Measurement Challenge

How do you measure something as complex as AI fluency? You track usage frequency, application breadth, output quality, integration depth, and strategic impact.

Assessment methods include skill demonstrations, peer reviews, output analysis, case studies, and progress tracking. What gets measured gets developed. Without fluency metrics, you can't build fluency systematically.
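To make the five tracked dimensions concrete, here is a minimal sketch of how they might be rolled up into a single score and mapped back onto the fluency spectrum. Everything beyond the dimension names and level names is an assumption made for illustration: the 0.0 to 1.0 scale, the equal weighting, the equal-width score bands, and the names `FluencyMetrics`, `fluency_score`, and `fluency_level` are hypothetical, not a prescribed method.

```python
from dataclasses import dataclass, fields

# Level names come from the fluency spectrum; the score bands are assumptions.
LEVELS = ["Awareness", "Access", "Application", "Integration", "Fluency"]


@dataclass
class FluencyMetrics:
    """One team's scores, each normalized to 0.0-1.0 (an assumed scale)."""
    usage_frequency: float
    application_breadth: float
    output_quality: float
    integration_depth: float
    strategic_impact: float


def fluency_score(m: FluencyMetrics) -> float:
    """Unweighted mean of the five dimensions (equal weighting is an assumption)."""
    values = [getattr(m, f.name) for f in fields(m)]
    for v in values:
        if not 0.0 <= v <= 1.0:
            raise ValueError("each metric must be between 0.0 and 1.0")
    return sum(values) / len(values)


def fluency_level(score: float) -> str:
    """Map a 0-1 score onto the five levels using equal-width bands (hypothetical)."""
    index = min(int(score * len(LEVELS)), len(LEVELS) - 1)
    return LEVELS[index]


# Example: a team that uses AI often but has not yet integrated it deeply.
team = FluencyMetrics(
    usage_frequency=0.8,
    application_breadth=0.4,
    output_quality=0.5,
    integration_depth=0.3,
    strategic_impact=0.2,
)
score = fluency_score(team)
print(f"{score:.2f} -> {fluency_level(score)}")
```

A single number hides a lot, so in practice the per-dimension scores are likely more useful than the average. In this example, high usage frequency alongside low integration depth is exactly the access-without-fluency pattern described earlier.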

Building Your Fluency Strategy

Develop organizational AI fluency as a strategic capability:

Assess current state: Where is your organization on the fluency spectrum?

Identify fluency champions: Who are your natural AI adopters?

Create learning pathways: Systematic development for different skill levels

Build practice opportunities: Safe spaces to experiment and learn

Measure and iterate: Track progress and adjust approach

The cultural elements matter: psychological safety, recognition systems, resource allocation, leadership modeling, and continuous learning.

Fluency isn't something you achieve. It's something you cultivate.

Fluency gets you to the starting line. Context gets you to the finish line. Next, we'll explore AI's real competitive advantage.